Environment-aware Dynamic Partitioning of Neural Networks (EADP)

Introduction:

In real-time applications, sensors deployed in outdoor environments continuously collect data and transmit it to an inference server, which hosts and executes a neural network model. Depending on the circumstances, this data can be quite large and take a long time to transmit, because transmission latency depends on both the quality of the communication channel and the size of the data being transferred. When channel quality degrades, throughput drops, which can significantly slow down the entire inference pipeline. We could avoid the transmission by running the neural network on an edge device located close to the sensor, but edge devices are far less powerful than a full-fledged server, so running the whole network there incurs a considerable performance penalty.
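To make the dependence on channel quality concrete, here is a minimal sketch of a simplified transmission model: latency is the payload size in bits divided by the link throughput. The function name `transmission_latency_s` and the uncompressed 640x640 RGB frame are illustrative assumptions, not part of EADP, and the model deliberately ignores propagation delay, protocol overhead, and retransmissions.

```python
def transmission_latency_s(payload_bytes: int, throughput_bps: float) -> float:
    """Seconds needed to send payload_bytes over a link sustaining throughput_bps (bits/s).

    Simplified model (assumption): ignores propagation delay, protocol overhead
    and retransmissions, so it is a lower bound on real transmission time.
    """
    if throughput_bps <= 0:
        raise ValueError("throughput must be positive")
    return payload_bytes * 8 / throughput_bps


# Hypothetical payload: one uncompressed 640x640 RGB frame, 1 byte per channel.
FRAME_BYTES = 640 * 640 * 3

if __name__ == "__main__":
    for mbps in (100, 10, 1):  # channel degrading from good to poor
        t = transmission_latency_s(FRAME_BYTES, mbps * 1e6)
        print(f"{mbps:>3} Mbit/s: {t:.3f} s per frame")
```

Because the payload size is fixed, every tenfold drop in throughput multiplies the per-frame transmission time by ten, from roughly 0.1 s at 100 Mbit/s to nearly 10 s at 1 Mbit/s.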

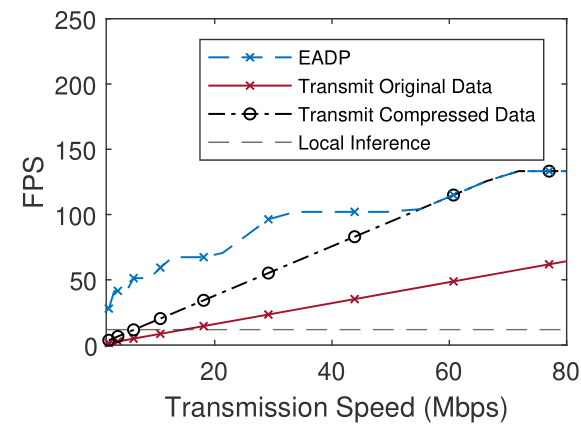

A neural network is essentially a series of mathematical operations (layers) applied one after another. This means we can run part of the network on one device, save the intermediate result, and run the remaining layers on another device. Moreover, as data passes through the layers of a neural network, the size of the intermediate result often first expands and then shrinks. In theory, we can exploit this: by splitting the network at a point where the intermediate result is small, we can transmit that result instead of either transmitting the original sensor data to the server or running the entire neural network on an edge device close to the sensor.
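The trade-off above can be sketched as a split-point search: for each candidate cut, add the edge compute time of the layers before the cut, the time to transmit the tensor at the cut, and the server compute time of the layers after it, then keep the cheapest option. This is a minimal illustration assuming per-layer latencies and output sizes have been profiled beforehand and the current throughput is known; `LayerProfile`, `best_split`, and the profile numbers are hypothetical, and this is not necessarily how EADP selects its partition point.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class LayerProfile:
    """Hypothetical per-layer profile, assumed to be measured offline."""
    edge_time_s: float    # latency of this layer on the edge device
    server_time_s: float  # latency of this layer on the inference server
    output_bytes: int     # size of the layer's output (the intermediate result)


def best_split(input_bytes: int, layers: Sequence[LayerProfile],
               throughput_bps: float) -> Tuple[int, float]:
    """Return (k, latency) for the split minimizing edge + transmission + server time.

    layers[:k] run on the edge, the tensor at the cut is sent over the link,
    and layers[k:] run on the server. k == 0 sends the raw sensor data;
    k == len(layers) runs every layer on the edge and sends only the final output.
    """
    if throughput_bps <= 0:
        raise ValueError("throughput must be positive")
    best_k, best_latency = 0, float("inf")
    for k in range(len(layers) + 1):
        edge_s = sum(layer.edge_time_s for layer in layers[:k])
        server_s = sum(layer.server_time_s for layer in layers[k:])
        # The tensor crossing the link is the raw input or the output of layer k-1.
        payload = input_bytes if k == 0 else layers[k - 1].output_bytes
        tx_s = payload * 8 / throughput_bps
        total = edge_s + tx_s + server_s
        if total < best_latency:  # strict '<' keeps the earliest split on ties
            best_k, best_latency = k, total
    return best_k, best_latency


# Hypothetical profile: the intermediate result first expands, then shrinks.
PROFILE = [
    LayerProfile(edge_time_s=0.01, server_time_s=0.001, output_bytes=4_000_000),
    LayerProfile(edge_time_s=0.01, server_time_s=0.001, output_bytes=500_000),
    LayerProfile(edge_time_s=0.50, server_time_s=0.002, output_bytes=1_000),
]

if __name__ == "__main__":
    for mbps in (1000, 20, 1):  # re-run the search as the channel changes
        k, latency = best_split(1_000_000, PROFILE, mbps * 1e6)
        print(f"{mbps:>5} Mbit/s: {k} layer(s) on edge, ~{latency:.3f} s")
```

With this made-up profile, a 1000 Mbit/s link favors sending the raw input (k = 0), a 20 Mbit/s link favors cutting after the second layer (k = 2), and a 1 Mbit/s link favors running every layer on the edge (k = 3). Re-running the search whenever the measured throughput changes is what makes the choice environment-aware in this sketch.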

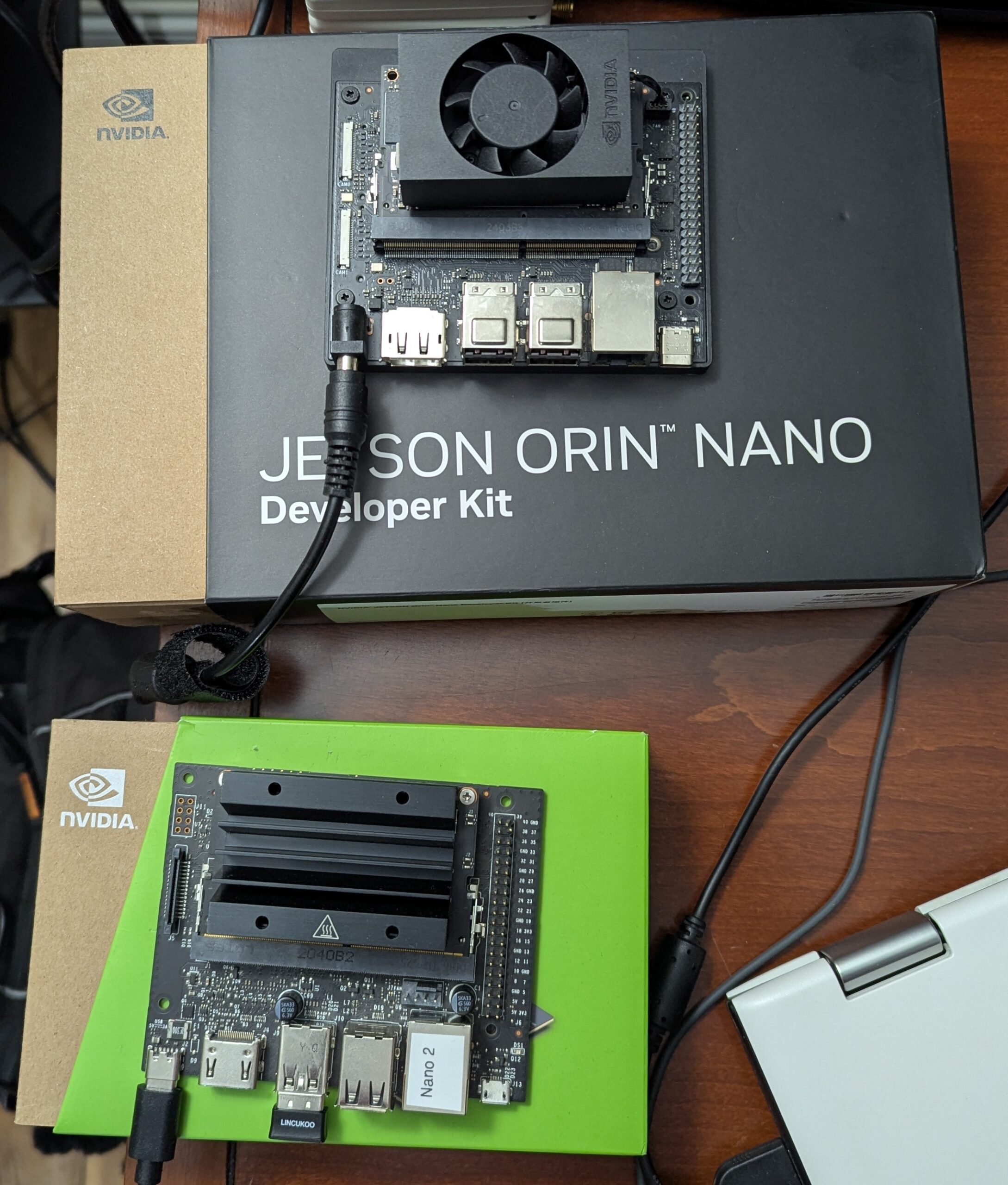

Setup:

Results:

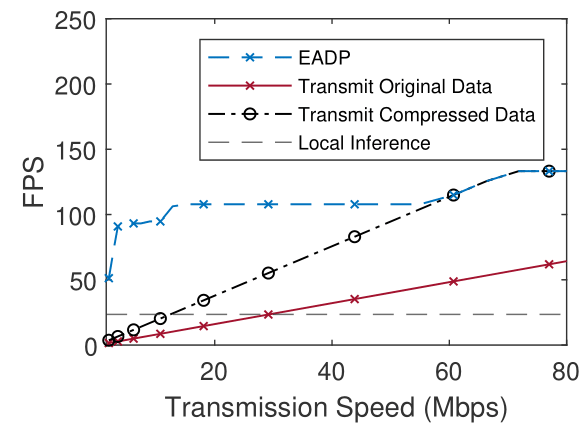

YOLOv8n:

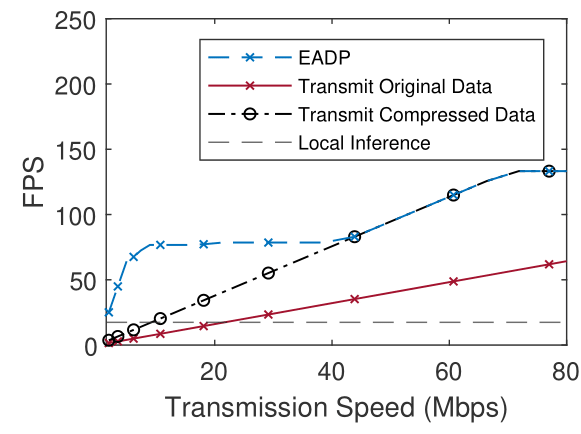

YOLOv8m:

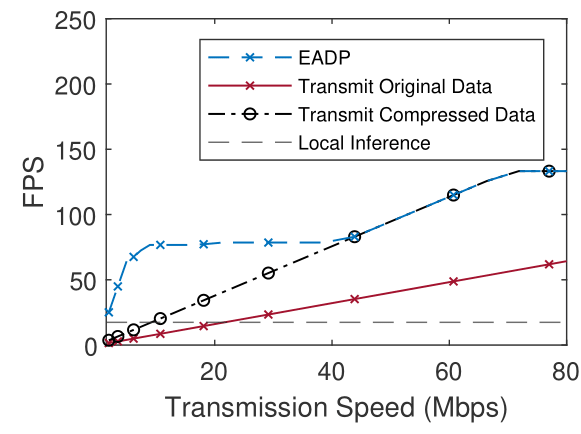

YOLOv8l:

YOLOv3-tiny:

These results were measured on a Jetson Orin Nano 8GB, and they show that Environment-aware Dynamic Partitioning significantly outperforms the other techniques evaluated.

An academic paper is currently in progress and will be available soon™.